Data Preprocessing

Data preprocessing is the first step in building a machine learning model. It consists of importing the required libraries, importing your dataset, handling missing data, encoding categorical data, splitting the dataset into training and test sets, and finally feature scaling.

Importing Libraries

In Python, a library is imported with the keyword import followed by the name of the library (e.g. numpy), optionally followed by the keyword as and an abbreviation for the library:

Example:

| import numpy as np |
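As a small sketch of how the abbreviation is used afterwards (the array values are made up for illustration), the functions of the library are reached through the alias. The second form, from ... import ..., pulls a single name out of a library and is the form used for scikit-learn later on this page:

```python
import numpy as np              # whole library, available under the alias np
from math import sqrt           # a single name imported from a library

values = np.array([1.0, 4.0, 9.0])   # illustrative data
total = values.sum()                  # call through the alias
root = sqrt(values[1])                # an imported name is used directly

print(total, root)
```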

Frequently Used Libraries

Here is a list of frequently used libraries and brief descriptions of how they are used in machine learning

Importing Your Dataset

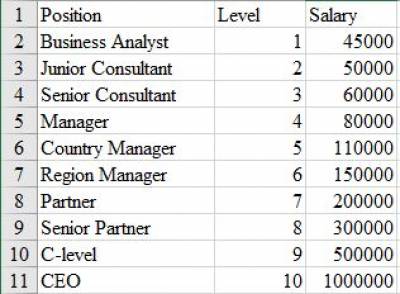

Let's say that we have a spreadsheet that looks like this:

Since each level corresponds to exactly one position, the first column adds no extra information and we can ignore it. Let's say that Salary is the dependent variable and Level is the independent variable that Salary depends on.

Step 1: Import the Data

Importing the data is done using the pandas library, imported here under its usual abbreviation pd:

| import pandas as pd |

| dataset = pd.read_csv('nameOfDatasheet.csv') |

Step 2: Select the values

iloc is an indexer on pandas DataFrames that selects values by integer position. Since we want the independent variable, x, to contain all the rows and just the second column, we can select the values like this:

| x = dataset.iloc[:, 1:-1].values |

Similarly, since we want the dependent variable, y, to contain all the rows and just the last column, we can select the values like this:

| y = dataset.iloc[:, -1].values |

So within the “[]” of iloc, the first argument selects the rows and the second argument selects the columns. A lone “:” selects everything along that axis.

Notice that we use “-1” to represent the final column. Slices exclude their end position, so “1:-1” refers to every column from the second column up to, but not including, the last one. If we want to take every column except for the last one then we would use “:-1”.
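The two selections can be tried out on a small stand-in for the spreadsheet. This is only a sketch: the column names and values are assumptions made up for illustration, and the CSV is read from a string instead of a file so that the example is self-contained.

```python
import io
import pandas as pd

# Hypothetical stand-in for the spreadsheet: Position, Level, Salary
csv_text = """Position,Level,Salary
Analyst,1,45000
Consultant,2,50000
Manager,3,60000
"""

dataset = pd.read_csv(io.StringIO(csv_text))

x = dataset.iloc[:, 1:-1].values   # all rows, second column up to (not including) the last
y = dataset.iloc[:, -1].values     # all rows, last column only

print(x.shape)  # (3, 1): x stays two-dimensional
print(y.shape)  # (3,): y is one-dimensional
```

Using the slice “1:-1” rather than the single index “1” keeps x as a two-dimensional array, which is the shape scikit-learn expects for the independent variables.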

Taking Care of Missing Data

If your data has missing values, there are multiple ways to handle them.

- You can remove the incomplete data entries entirely. This usually won't cause problems in a large dataset with few missing values, because you are removing only a small part of the data.

- You can fill each missing cell with the average of all the values in that column. This is the better choice when the dataset is small or many data points are missing, because removing those entries would significantly reduce the amount of data available for training the model. To do this we use a tool from scikit-learn called SimpleImputer.
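The first option, removing incomplete entries, can be sketched with the pandas dropna method. The column names and values below are made up for illustration:

```python
import numpy as np
import pandas as pd

# Illustrative data with one missing Age and one missing Salary
data = pd.DataFrame({
    "Age": [44.0, 27.0, np.nan, 38.0],
    "Salary": [72000.0, 48000.0, 54000.0, np.nan],
})

complete_rows = data.dropna()   # keep only rows without any missing value
print(len(data), len(complete_rows))
```

In this tiny example half of the rows are lost, which is exactly the situation where filling in the missing values is the better option.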

SimpleImputer

SimpleImputer is a class that replaces missing array cells with a value computed from the other values in the same column. It can use the mean, median, or most frequent value (mode) of that column, or it can fill in a constant value.

Here's how to create the SimpleImputer. It still has to be fitted to the numerical columns and applied to them before any missing values are actually replaced:

| from sklearn.impute import SimpleImputer |

| imputer = SimpleImputer(missing_values = np.nan, strategy = 'mean') |
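Creating the imputer does not change any data by itself. A minimal sketch of the remaining steps, assuming a small made-up array of numerical columns (age and salary), could look like this:

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Illustrative numerical columns (age, salary) with missing cells
values = np.array([
    [44.0, 72000.0],
    [27.0, np.nan],
    [np.nan, 54000.0],
    [38.0, 61000.0],
])

imputer = SimpleImputer(missing_values=np.nan, strategy='mean')
imputer.fit(values)                  # learn the mean of each column
filled = imputer.transform(values)   # replace each nan with its column mean

print(filled)
```

The imputer only works on numerical columns when the strategy is 'mean', so in a dataset that also contains text columns it would be applied to a slice of the numerical columns only.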

Encoding Categorical Data

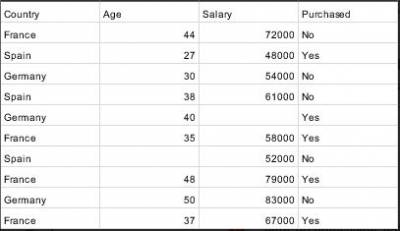

When you have data that is not a numerical value and it's a category that we cannot ignore then we need to turn it into data that is numerical while also not in an ordered format (e.g. 0, 1, 2). Let's take the following dataset:

In the column on the left we will need to encode this data because it correlates countries to the ages and salaries columns. If we do not turn these strings into numerical values then that could cause errors in the machine learning model. One option to encode the data is to use a simple consecutive numerical format (e.g. 0, 1, 2). So France = 0, Spain = 1, Germany = 2. However, we don't want this either because the machine learning model could conclude that the order of this data matters, which in this case it doesn't. So we need a method to encode the data without causing this error. The method that we will use is called one hot encoding.

One Hot Encoding

One hot encoding takes a set of n distinct categories and creates a vector with n dimensions, then assigns each category to one unique position in that vector. Using the same dataset we have three countries, which means we will have a vector with n = 3 and it will look like this:

| [(France) (Spain) (Germany)] |

To represent one country we set the corresponding position to 1 and all other positions to 0:

| France: | [1 0 0] |

| Spain: | [0 1 0] |

| Germany: | [0 0 1] |

To use one hot encoding in Python we can use the OneHotEncoder class from the scikit-learn library:

| from sklearn.compose import ColumnTransformer |

| from sklearn.preprocessing import OneHotEncoder |

| ct = ColumnTransformer(transformers=[('encoder', OneHotEncoder(), [0])], remainder = 'passthrough') |

| x = np.array(ct.fit_transform(x)) |

In this block of code we need to use the ColumnTransformer class because we are changing a specific column in the dataset and not the whole dataset. Here the ColumnTransformer applies a one hot encoder to column 0 (the first column), and remainder = 'passthrough' keeps all other columns unchanged.
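The following sketch runs the same steps on a few made-up rows (country, age, salary) so that the result can be inspected. One detail to be aware of: OneHotEncoder sorts the categories, so the encoded columns here come out in the order France, Germany, Spain rather than in the order used in the illustration above.

```python
import numpy as np
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import OneHotEncoder

# Illustrative rows: country, age, salary
x = np.array([
    ['France', 44.0, 72000.0],
    ['Spain', 27.0, 48000.0],
    ['Germany', 30.0, 54000.0],
    ['Spain', 38.0, 61000.0],
], dtype=object)

ct = ColumnTransformer(
    transformers=[('encoder', OneHotEncoder(), [0])],
    remainder='passthrough',
)
x = np.array(ct.fit_transform(x))

# Categories are sorted, so the one hot columns are France, Germany, Spain
categories = ct.named_transformers_['encoder'].categories_[0]
print(categories)
print(x)
```

The encoded columns are placed first and the passed-through columns (age and salary) follow, so the single country column becomes three columns at the start of x.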

fit, transform, and fit_transform

Most transformations applied to the data, and most models, are used through a “fit”, “transform”, or “fit_transform” method.

- When we “fit” a transformer to a dataset, it learns whatever it needs from that dataset (for example the mean of each column, or the set of categories) according to the parameters it was created with. The data itself is not changed.

- When we “transform”, the fitted transformer is applied to a dataset and the changed data is returned.

- A “fit_transform” does the two in one call.
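The difference between the three methods can be sketched with the SimpleImputer from the previous section. The data is a made-up single column, and statistics_ is the attribute in which the fitted imputer stores the value it learned for each column:

```python
import numpy as np
from sklearn.impute import SimpleImputer

data = np.array([[1.0], [np.nan], [3.0]])   # illustrative single column

# Two separate steps: learn the column mean, then apply it
imputer = SimpleImputer(strategy='mean')
imputer.fit(data)
two_steps = imputer.transform(data)

# One call that does both on the same data
one_step = SimpleImputer(strategy='mean').fit_transform(data)

print(imputer.statistics_)               # value learned during fit
print(np.allclose(two_steps, one_step))  # both routes give the same result
```

Keeping the two steps separate matters when the same fitted transformer has to be applied to a second dataset, as is done with the test set in the feature scaling section.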

Label Encoding

Label encoding simply encodes text labels as integers, one integer per distinct label. With only two labels the result is binary 0 and 1 values. In the data above we still have “No” and “Yes” in the final column, which is our dependent variable (y vector). So we need to encode these values so that they are 0s and 1s. We could simply go into the Excel sheet and change the “No” and “Yes” to 0 and 1 respectively, but with large datasets that would take very long, so the LabelEncoder class is very useful for this task. To use the LabelEncoder we import it from the sklearn library again:

| from sklearn.preprocessing import LabelEncoder |

| le = LabelEncoder() |

| y = le.fit_transform(y) |

Again, this “fit_transform” fits the LabelEncoder (le) to y, applies it to y, and the output is assigned back to y.
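As a quick check of what the encoder produces, here is a sketch on a handful of made-up labels. The classes_ attribute lists the original labels in sorted order, and the position of a label in that list is its integer code:

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder

y = np.array(['No', 'Yes', 'No', 'Yes', 'Yes'])   # illustrative labels

le = LabelEncoder()
y = le.fit_transform(y)

print(le.classes_)   # labels in sorted order; the position is the code
print(y)
```

If the original labels are needed again later, le.inverse_transform(y) maps the integers back to “No” and “Yes”.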

Splitting Datasets Into Training and Testing Data

When making ML models we need two different datasets: training data and test data. We train the regression or classification model on the training set, and then we validate, or test, that model on a test set that it has not seen during training, comparing its predictions against the known target, or desired, outputs. We can split the data into a training and test set using scikit-learn:

| from sklearn.model_selection import train_test_split |

| X_train, X_test, y_train, y_test = train_test_split(x, y, test_size = 0.2, random_state = 1) |

In this case we can name the variables anything, but this naming convention is simple and easy to understand. We can also change the test size, so if we wanted to train the model on 90% of the data we would set test_size equal to 0.1. random_state seeds the shuffling that is applied to the data before the split, so that the same split is produced on every run.
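A small sketch on made-up data shows the resulting sizes and the effect of random_state. With 10 samples and test_size = 0.2, 8 samples go to the training set and 2 to the test set:

```python
import numpy as np
from sklearn.model_selection import train_test_split

x = np.arange(20).reshape(10, 2)   # 10 illustrative samples with 2 features
y = np.arange(10)                  # one target value per sample

X_train, X_test, y_train, y_test = train_test_split(
    x, y, test_size=0.2, random_state=1
)
print(X_train.shape, X_test.shape)

# The same random_state gives the same split again
X_train_again, _, _, _ = train_test_split(x, y, test_size=0.2, random_state=1)
print(np.array_equal(X_train, X_train_again))
```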

Feature Scaling

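Feature scaling is a task that we need to perform when the features in our data have very different ranges of values. For example, take the dataset with the different countries: we need feature scaling because the values in the salary column are far larger than those in the age column. To scale the features we will simply use the StandardScaler class from the sklearn library. StandardScaler standardizes each column by subtracting its mean and dividing by its standard deviation, so that every scaled column has a mean of 0 and a standard deviation of 1. The scaled values are not confined to a fixed range, although most of them typically fall between about -3 and 3. In the code below, the scaler is fitted on the training set only and the same mean and standard deviation are then applied to the test set, so that no information from the test set leaks into training. The slice [:, 3:] selects the columns from index 3 onward, which skips the three one hot encoded country columns.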

| from sklearn.preprocessing import StandardScaler |

| sc = StandardScaler() |

| X_train[:, 3:] = sc.fit_transform(X_train[:, 3:]) |

| X_test[:, 3:] = sc.transform(X_test[:, 3:]) |
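The effect of the scaler can be checked on a few made-up rows (age and salary only, without the encoded country columns). This is a sketch under those assumptions, not the dataset from this page:

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Illustrative training and test columns: age, salary
X_train = np.array([
    [25.0, 40000.0],
    [35.0, 60000.0],
    [45.0, 80000.0],
    [55.0, 200000.0],
])
X_test = np.array([[30.0, 50000.0]])

sc = StandardScaler()
X_train_scaled = sc.fit_transform(X_train)   # learn mean/std from the training data
X_test_scaled = sc.transform(X_test)         # reuse the training mean/std

print(X_train_scaled.mean(axis=0))   # approximately 0 for each column
print(X_train_scaled.std(axis=0))    # approximately 1 for each column
print(X_train_scaled.max())          # larger than 1: values are not bounded
```

After scaling, age and salary are on a comparable scale, so neither column dominates simply because its raw numbers are larger.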

Authors

Contributing authors:

Created by jclaudio on 2020/09/28 21:56.