Updated: 2/1/2016

Overview

Learn forecasting here! We'll go through some basic concepts.

Linear Regression

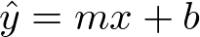

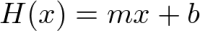

- Predict a y value for every x; y-hat (ŷ) is the predicted value.

- Simple linear model with slope and intercept.

- Linear regression is a model creation procedure:

- Use

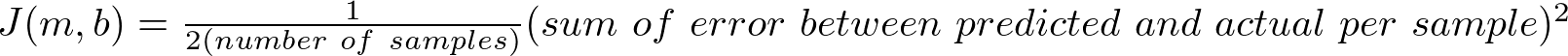

J, the cost function, to measure which models are better (with less error):

Jis arbitrary, but the one listed above is a rough overview of least-squares error. It is also referred to as Sum of Squared Error (SSE).
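As a minimal sketch, here is SSE used as J to decide which of two candidate lines fits better. It assumes the linear model H(x) = mx + b. The helper names `predict` and `sse`, the toy data and the two candidate (m, b) pairs are made up for illustration.

```python
def predict(m: float, b: float, x: float) -> float:
    """Linear model H(x) = m*x + b; returns y-hat for a single x."""
    return m * x + b


def sse(m: float, b: float, xs: list, ys: list) -> float:
    """Cost J: sum of squared differences between observed y and predicted y-hat."""
    return sum((y - predict(m, b, x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical toy data lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

# Lower J means a better-fitting model.
good = sse(2.0, 1.0, xs, ys)   # the line the data came from
worse = sse(1.0, 0.0, xs, ys)  # a poorer guess
print(good, worse)  # 0.0 30.0
```

The poorer guess predicts 0, 1, 2, 3. Its errors are 1, 2, 3 and 4, so J = 1 + 4 + 9 + 16 = 30, while the exact line has J = 0.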

- There are different ways of optimizing H(x) using J. One is gradient descent, which repeatedly moves the model's parameters in the direction opposite the gradient of J, working toward a point where every component of the gradient is 0.

- When the gradient is 0, J is at a stationary point, which we hope is where the model has the least error.

- Catch: just because the gradient is 0 doesn't mean it's a global minimum! It may only be a local minimum (or a saddle point).

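- We can also solve for the zero point of the gradient directly with calculus. Take the partial derivative of J with respect to each model parameter: the slope m and intercept b, or one weight per feature if more than one feature (x1, x2, x3, etc.) is used to find y. Then set each derivative to 0 and solve.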
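Both approaches can be sketched for the one-feature case with J taken to be SSE. Gradient descent steps m and b against the partial derivatives of J. The closed form sets those same derivatives to 0 and solves for m and b. The learning rate `lr`, the step count, the function names and the data are illustrative assumptions, not tuned or prescribed values.

```python
def gradient(m: float, b: float, xs: list, ys: list) -> tuple:
    """Partial derivatives of J(m, b) = sum((y - (m*x + b))**2).

    dJ/dm = -2 * sum(x * (y - (m*x + b)))
    dJ/db = -2 * sum(y - (m*x + b))
    """
    dm = -2.0 * sum(x * (y - (m * x + b)) for x, y in zip(xs, ys))
    db = -2.0 * sum(y - (m * x + b) for x, y in zip(xs, ys))
    return dm, db


def gradient_descent(xs, ys, lr=0.01, steps=5000):
    """Repeatedly step (m, b) against the gradient of J until it is (nearly) 0."""
    m, b = 0.0, 0.0
    for _ in range(steps):
        dm, db = gradient(m, b, xs, ys)
        m -= lr * dm
        b -= lr * db
    return m, b


def closed_form(xs, ys):
    """Set dJ/dm = 0 and dJ/db = 0, then solve for m and b directly."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    m = sxy / sxx
    b = y_mean - m * x_mean
    return m, b


# Hypothetical noisy data scattered around y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]

m_gd, b_gd = gradient_descent(xs, ys)
m_cf, b_cf = closed_form(xs, ys)
print(round(m_gd, 4), round(b_gd, 4))  # 1.97 1.06
print(round(m_cf, 4), round(b_cf, 4))  # 1.97 1.06
```

The two methods agree here because SSE for a linear model is convex, so the catch above does not bite. For this J, the only zero-gradient point is the global minimum. A learning rate that is too large makes gradient descent diverge instead of converging.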

Review

- Use the cost function J to optimize H, the prediction model.

- Here, H is a linear function: H(x) = mx + b.

- J is often the SSE (least-squares error).

- Apply linear algebra when the data has a larger number of input features: Y = AX + B.
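For many features, one common way to fit the matrix form uses NumPy's least-squares solver. In this sketch each row of `X` is one sample, so the product is written `X @ w` rather than AX. That is the same model with the matrix transposed. A column of ones is prepended so that the intercept (the B term) becomes just another weight. The data and the generating weights are made up for illustration.

```python
import numpy as np

# Hypothetical data: each row is one sample, each column one feature (x1, x2).
X = np.array([
    [0.0, 0.0],
    [1.0, 0.0],
    [0.0, 1.0],
    [1.0, 1.0],
    [2.0, 1.0],
])
# Targets generated from y = 1 + 2*x1 - 3*x2 (no noise, so the fit is exact).
y = 1.0 + 2.0 * X[:, 0] - 3.0 * X[:, 1]

# Prepend a column of ones so the intercept is learned as an ordinary weight.
design = np.column_stack([np.ones(len(X)), X])

# Least-squares solution of design @ w ~= y; w = [intercept, weight_x1, weight_x2].
w, residuals, rank, singular_values = np.linalg.lstsq(design, y, rcond=None)
print(np.round(w, 6))
```

`lstsq` minimizes the same sum of squared errors as in the one-feature case. It just solves for every weight at once.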

K-Folds & Cross-Validation

- Split the data into K groups (folds) of equal size.

- Use some of the folds as training data and the rest as test data (commonly K - 1 folds for training and the remaining fold for testing).

- Use all combinations of training/test data across the folds to get multiple models.

- Aggregate the models (for example, by averaging their parameters) to get a final model.
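The procedure above can be sketched with a one-feature linear model. The sketch trains on K - 1 folds and measures SSE on the held-out fold, repeating once per fold. It then averages the K slopes and intercepts. Averaging parameters is only one possible aggregation and is an assumption here. The helper names, the fixed shuffle seed and the noisy toy data are also illustrative.

```python
import numpy as np


def k_fold_indices(n_samples: int, k: int, seed: int = 0) -> list:
    """Shuffle the sample indices and split them into k folds of (nearly) equal size."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), k)


def fit_line(x: np.ndarray, y: np.ndarray) -> tuple:
    """Least-squares slope and intercept for H(x) = m*x + b."""
    m, b = np.polyfit(x, y, deg=1)  # coefficients come highest power first
    return float(m), float(b)


def cross_validate(x: np.ndarray, y: np.ndarray, k: int = 5):
    """Train on k-1 folds and test on the held-out fold, once per fold."""
    folds = k_fold_indices(len(x), k)
    models, test_errors = [], []
    for i, test_idx in enumerate(folds):
        # Every fold except the i-th one forms this round's training set.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        m, b = fit_line(x[train_idx], y[train_idx])
        residuals = y[test_idx] - (m * x[test_idx] + b)
        models.append((m, b))
        test_errors.append(float(np.sum(residuals ** 2)))  # SSE on the held-out fold
    # Aggregation choice (an assumption): average the k models' parameters.
    m_avg = float(np.mean([m for m, _ in models]))
    b_avg = float(np.mean([b for _, b in models]))
    return (m_avg, b_avg), test_errors


# Hypothetical data: y = 3x - 2 plus a little Gaussian noise.
x = np.arange(20, dtype=float)
y = 3.0 * x - 2.0 + np.random.default_rng(1).normal(0.0, 0.5, size=20)

(m, b), errors = cross_validate(x, y, k=5)
print(f"m={m:.2f}, b={b:.2f}, mean test SSE={np.mean(errors):.3f}")
```

The per-fold test errors are also useful on their own. Their average estimates how well the model does on data it was not trained on.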

Review

- Split the data between training and test, get models, aggregate models.

Correlation / Cross-Correlation

Coming soon…

Ridge Regression

Coming soon…

K-Means Clustering

Coming soon…

Hierarchical Clustering

Coming soon…

Non-negative Matrix Factorization (NMF)

Coming soon…

Principal Component Analysis (PCA)

Coming soon…

Exploratory Factor Analysis (EFA)

Coming soon…

Normalization

Coming soon…

Non-Linear Regression

Coming soon…

Neural Networks

Coming soon…

Visualization

Coming soon…

Data & Density Distribution

Coming soon…