Running a service in production

I wanted to jot down some of my notes about running services in production. For many of you folks, this is probably one of the first times you'll be touching a production service.

What is a service?

More generally, a service is a program running on a machine (or a set of machines) somewhere that does some ongoing job. For most folks, the most familiar example is a website. Take hawaii.edu: it's a website, but behind it there is a service (or several services) running somewhere that answers HTTP requests from users' browsers.

For our lab, the gateway is an example of a service: it runs in the background, collecting sensor data and writing it into Postgres.
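To make "a program that serves HTTP requests" concrete, here is a minimal sketch using only Python's standard library (`http.server`). It is an illustration, not how hawaii.edu or the gateway is actually implemented; the handler name, response text, and default port are made up.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: answers every GET request with a short plain-text body."""

    def do_GET(self):
        body = b"hello from a service\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host="127.0.0.1", port=8000):
    """Create (but do not start) the server; port=0 lets the OS pick a free port."""
    return HTTPServer((host, port), HelloHandler)
```

A real service would call `make_server().serve_forever()`, which blocks and keeps handling requests for as long as the process is alive. Keeping that process alive is the problem the rest of this page is about.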

Running a service

Since a service is just a program running on a machine, you could simply start it from a desktop session or inside someone's terminal shell. This would work: the program would respond to requests. However, what happens if the machine restarts? What happens if the user logs out? And what happens if there is no one physically there to start the program again?

What if it crashes?

This is why most services rely on a service manager to handle their lifecycle: starting them at boot and restarting them when they crash. One option is Docker, running the service in a container with a restart policy so that it comes back up automatically after a reboot. Another standard option for services on a Linux machine is systemd: https://www.freedesktop.org/wiki/Software/systemd/

When running a service in production, you want to make sure it stays up, so it's important to let something like systemd manage it.
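Conceptually, the core of what a service manager does for a program that crashes is a loop: start the process, wait for it to exit, and start it again. The toy Python supervisor below sketches only that idea; `supervise` and its parameters are hypothetical, and systemd's real behavior has many more knobs (for example `RestartSec=` for the delay between restarts).

```python
import subprocess
import sys
import time


def supervise(cmd, max_restarts=None, delay=1.0):
    """Toy supervisor: run cmd and start it again whenever it exits.

    max_restarts=None restarts forever (roughly like Restart=always);
    a number caps the restarts so a demo terminates.
    Returns the number of restarts performed.
    """
    restarts = 0
    while True:
        proc = subprocess.run(cmd)  # blocks until the child process exits
        print(f"process exited with code {proc.returncode}", file=sys.stderr)
        if max_restarts is not None and restarts >= max_restarts:
            return restarts
        restarts += 1
        time.sleep(delay)  # brief pause so a crash loop doesn't spin the CPU
```

For example, `supervise([sys.executable, "-c", "import sys; sys.exit(1)"], max_restarts=2, delay=0)` runs an always-failing program three times and returns `2`.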

Here's an example of a very simple systemd service file:

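```ini
[Unit]
Description=Example app
# Order startup after network.target
After=network.target

[Service]
Type=simple
# Account the service runs as
User=scel
WorkingDirectory=/home/example-user/example-app
ExecStart=/home/example-user/example-app/run.sh --some-flag
# Restart the process whenever it exits (cleanly or not), unless stopped via systemctl
Restart=always

[Install]
# Pulled in at boot once the unit is enabled
WantedBy=multi-user.target
```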

Install it as `/etc/systemd/system/example-app.service`.

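After installing it, reload systemd's configuration so it picks up the new file, then start the service. Enabling it makes systemd start it at boot (via the `WantedBy=` line in `[Install]`):

```shell
sudo systemctl daemon-reload
sudo systemctl start example-app.service
sudo systemctl enable example-app.service
```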

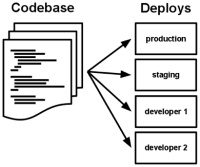
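When systemd stops a service (for example with `systemctl stop example-app.service`), it sends the process SIGTERM by default and follows up with SIGKILL if the process doesn't exit within a timeout. A service that writes data, like the gateway writing to Postgres, should catch SIGTERM and finish its current unit of work instead of dying halfway. A minimal Python sketch, assuming the work can be split into short iterations; `Worker` and `do_work` are hypothetical names:

```python
import signal


class Worker:
    """Long-running loop that stops cleanly when it receives SIGTERM."""

    def __init__(self):
        self.running = True
        # Set a flag on SIGTERM instead of letting the process die mid-task.
        signal.signal(signal.SIGTERM, self._handle_sigterm)

    def _handle_sigterm(self, signum, frame):
        self.running = False

    def run(self, do_work):
        """Call do_work(i) until SIGTERM arrives; return how many iterations ran."""
        iterations = 0
        while self.running:
            do_work(iterations)
            iterations += 1
        # Cleanup would go here: flush buffers, close database connections, etc.
        return iterations
```

The flag is only checked between iterations, so each iteration should be short enough to finish well within systemd's stop timeout.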

Deployments and version control

Services are rarely, if ever, static: they need to be updated with new changes and bug fixes. Rolling out a new version is called a deployment.

Deployments should be tied to some form of version control (like git) or to an artifact repository, such as a Debian package repository. Avoid making the deployed code freely editable by anyone with access to the machine, since changes made that way are difficult, if not impossible, to track.
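One lightweight way to keep deployments tied to git is to have the deploy step refuse to run when the checkout on the machine has uncommitted edits, and to record which commit is live. A sketch, assuming the service is deployed as a git checkout; the helper names are hypothetical, while `git status --porcelain` and `git rev-parse HEAD` are standard git commands:

```python
import subprocess


def git(*args, repo="."):
    """Run a git command inside repo and return its stdout as text."""
    result = subprocess.run(
        ["git", *args], cwd=repo, check=True, capture_output=True, text=True
    )
    return result.stdout


def is_clean(porcelain_status):
    """`git status --porcelain` prints nothing when there are no local changes."""
    return porcelain_status.strip() == ""


def deployable_commit(repo="."):
    """Refuse to deploy hand-edited files; return the commit being deployed."""
    if not is_clean(git("status", "--porcelain", repo=repo)):
        raise RuntimeError(f"{repo} has uncommitted changes; commit them before deploying")
    return git("rev-parse", "HEAD", repo=repo).strip()
```

`git status --porcelain` prints one line per modified or untracked file, so empty output means the checkout matches the committed tree (apart from ignored files), and the hash from `git rev-parse HEAD` identifies exactly what is running.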

Staging deployments

When running production services, you usually don't want to push changes straight to production. You want an environment before production that you can break if needed. Such an environment is called a staging environment, and it's pretty standard for many services.

To have a staging environment, you may need to mirror some of your production configuration. A staging environment can use a different database or database table, or even run on a completely different machine. There are various tradeoffs in how closely staging should mirror production, so the exact details of a staging environment are up to the development team.
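One common way to run the same code in both staging and production is to select the environment through configuration rather than code changes, for example via environment variables as the Twelve-Factor App recommends. A minimal sketch; the variable names `APP_ENV` and `DATABASE_URL` and the table names are assumptions for illustration, not the lab's actual settings:

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Config:
    env: str
    database_url: str
    table: str


# Hypothetical per-environment settings: staging writes to its own table.
TABLES = {
    "production": "sensor_readings",
    "staging": "sensor_readings_staging",
}


def load_config(environ: Mapping[str, str] = os.environ) -> Config:
    """Build the config from APP_ENV and DATABASE_URL (KeyError if DATABASE_URL is unset)."""
    env = environ.get("APP_ENV", "staging")  # default to the environment that is safe to break
    if env not in TABLES:
        raise ValueError(f"unknown APP_ENV {env!r}; expected one of {sorted(TABLES)}")
    return Config(env=env, database_url=environ["DATABASE_URL"], table=TABLES[env])
```

With systemd, the variables can be set per deployment in the unit file, e.g. `Environment=APP_ENV=staging` in the `[Service]` section, so the staging and production units differ only in configuration.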

Further reading

I intended this page as an intro of sorts to what you'll start with in the lab. Many of the topics above are covered by the “Twelve-Factor App” methodology. It's useful to read, as it covers many of the principles used in practice.

Authors


Created by kluong on 2022/10/07 15:51.